LLM-PBE: Assessing Data Privacy in Large Language Models

Published in VLDB 2024

Best Research Paper Nomination!

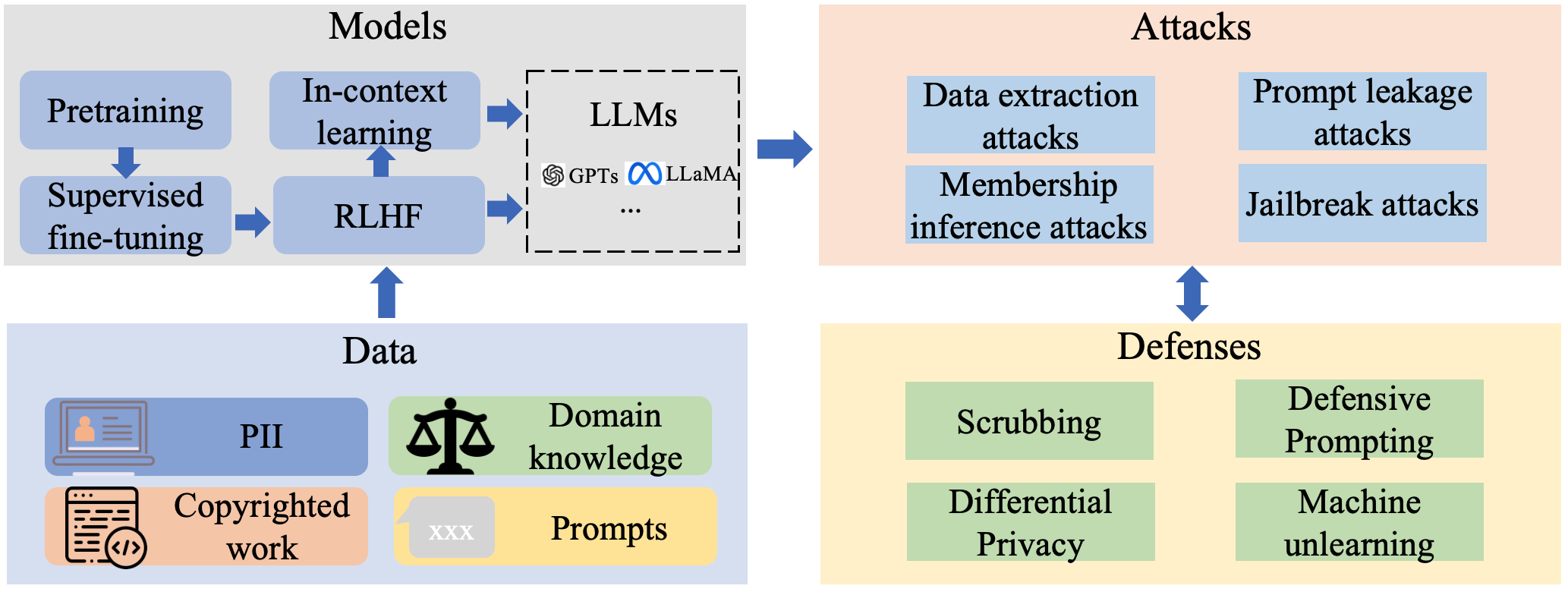

A toolkit for systematically evaluating data privacy risks in LLMs. It incorporates diverse attack and defense strategies and handles various data types and metrics.

WARNING: This paper contains model outputs that may be considered offensive.